an overview of the grammatical theories

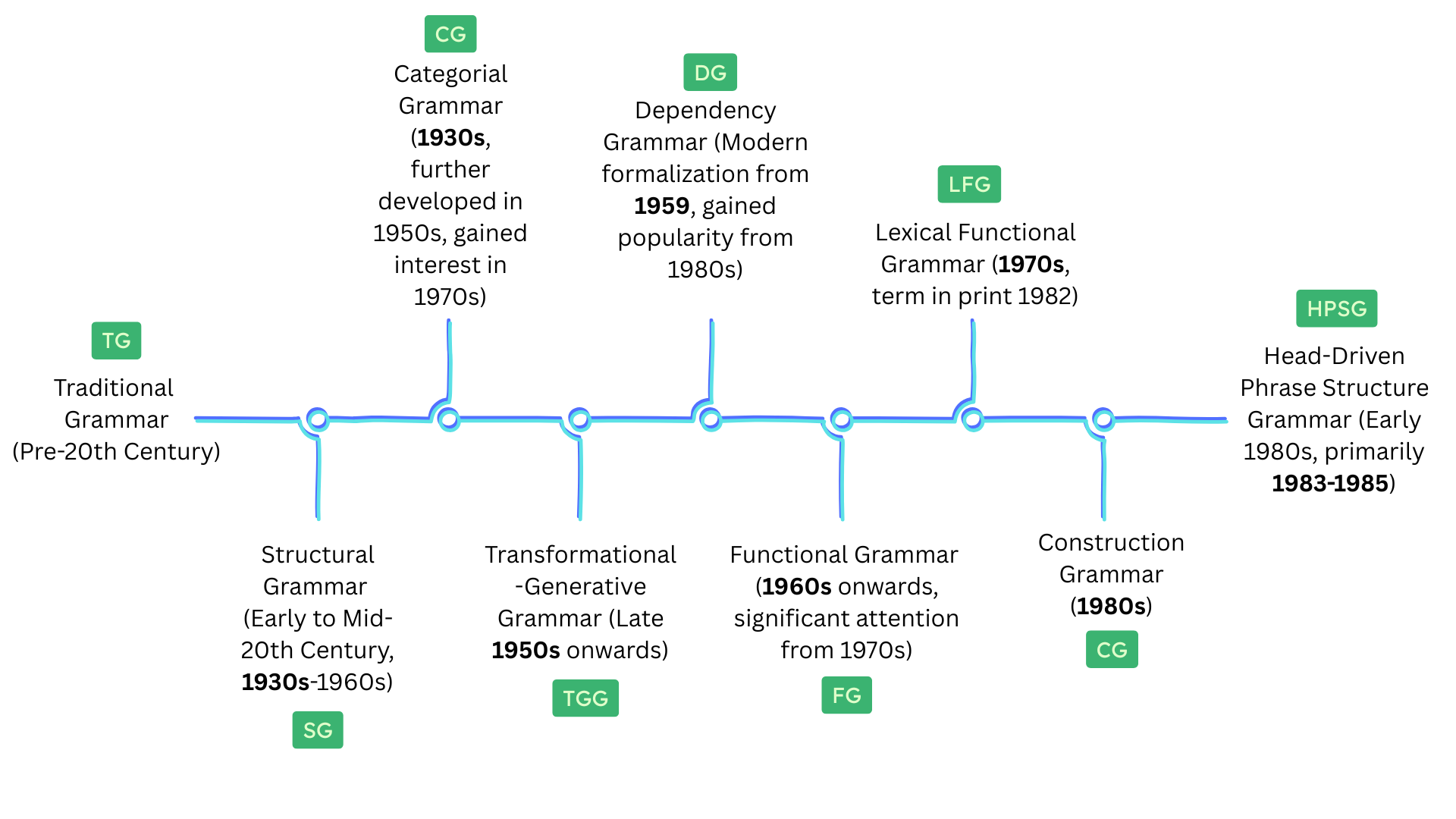

Timeline of Major Grammar Theories: Traditional Grammar (TG), Structural Grammar (SG), Categorial Grammar (CG), Transformational-Generative Grammar (TGG), Dependency Grammar (DG), Functional Grammar (FG), Lexical Functional Grammar (LFG), Construction Grammar (CG), and Head-Driven Phrase Structure Grammar (HPSG).

1. Traditional Grammar (TG)

- Period of Dominance: Predominantly before the 20th century, though its influence persists.

- Core Idea: Rooted in ancient Greek and Latin grammar, its principles have been influential for centuries, dating back to classical antiquity (e.g., Pāṇini in 6th-5th century BC India, Dionysius Thrax in 2nd century BC Greece). It focuses on prescribing “correct” usage based on established rules, often viewing Latin as the ideal model for all languages.

- Key Features:

- Prescriptive: Dictates how language should be used, rather than describing how it is used.

- Categorization: Emphasizes parts of speech (noun, verb, adjective, etc.) and their morphological forms (e.g., conjugations, declensions).

- Syntactic Analysis: Primarily sentence-level analysis, often using parsing methods to identify subject, predicate, object, etc.

- Focus on Meaning: Relies heavily on semantic notions (e.g., “a noun is the name of a person, place, or thing”).

- Limitations: Often struggles with the diversity and fluidity of natural language, sometimes imposing rules that don’t reflect actual usage.

2. Structural Grammar (SG)

- Period of Dominance: Mid-20th century (roughly 1930s-1960s).

- Core Idea: Developed as a reaction against the prescriptivism and semantic basis of Traditional Grammar. It advocates for the scientific study of language by focusing on observable linguistic data (utterances) and their distribution. Influenced by Bloomfieldian linguistics.

- Key Features:

- Descriptive: Aims to describe how language is used, without imposing external norms.

- Empirical: Relies on analyzing actual language data.

- Distributional Analysis: Identifies linguistic units (phonemes, morphemes, words) based on where and how they occur in relation to other units.

- Immediate Constituent Analysis (ICA): A method for breaking down sentences into their immediate structural components, often represented by tree diagrams.

- Form over Meaning: Prioritizes the formal arrangement of linguistic elements over their meaning in analysis.

- Limitations: Often criticized for not adequately accounting for the creativity of language or the speaker’s underlying knowledge.

3. Categorial Grammar (CG)

- Core Idea: Views grammar as a system of types, where words are assigned categories that specify their combinatory properties. It is a highly formal and mathematically oriented approach.

- Key Features:

- Functional Application: The primary mechanism for combining linguistic units. Categories are often represented as “functions” that take arguments and produce new categories.

- Lexicalized: All syntactic information is encoded in the lexical entries of words.

- Directionality: Categories specify the direction in which they combine with other categories (e.g., a verb might take a noun phrase to its right).

- No Phrase Structure Rules: Syntactic structure emerges directly from the combination of lexical categories.

- Semantic Transparency: Often aims for a direct mapping between syntactic structure and semantic interpretation.

- Varieties: Different systems exist, such as the Lambek Calculus, which has strong connections to mathematical logic.

4. Transformational-Generative Grammar (TGG)

- Period of Dominance: From the late 1950s onwards, heavily associated with Noam Chomsky.

- Core Idea: A significant paradigm shift that proposed that linguistic knowledge is innate and that speakers possess a “generative” grammar that allows them to produce and understand an infinite number of sentences. It distinguishes between deep structure (the underlying meaning) and surface structure (the actual spoken or written form).

- Key Features:

- Generative: Aims to provide a set of explicit rules that can generate all and only the grammatical sentences of a language.

- Competence vs. Performance: Distinguishes between a speaker’s underlying linguistic knowledge (competence) and their actual use of language (performance).

- Deep Structure & Surface Structure: Sentences have an abstract deep structure (representing core meaning and grammatical relations) and a surface structure (how they are pronounced). Transformations map deep structures to surface structures.

- Universal Grammar (UG): Proposes that humans are born with an innate linguistic faculty that provides universal principles common to all languages.

- Recursion: Explains how language can generate infinitely long sentences through repetitive application of rules.

- Evolution: Has undergone several revisions (e.g., Standard Theory, Extended Standard Theory, Government and Binding Theory, Minimalist Program).

5. Dependency Grammar (DG)

- Core Idea: Focuses on dependency relations between words in a sentence. Instead of a phrase-structure hierarchy (like TGG or SG), it posits that one word (the head) governs or licenses another word (its dependent).

- Key Features:

- Head-Dependent Relations: Every word in a sentence (except the main verb in some analyses) is a dependent of exactly one other word, or it’s the head of a clause.

- No Phrase Nodes: Typically doesn’t use non-terminal phrase nodes (like NP, VP) directly, although such concepts might be implicitly captured by the dependency relations.

- Direct Lexical Relations: Emphasizes direct grammatical relations between words.

- Cross-Linguistic Applicability: Often considered well-suited for languages with freer word order.

- Representation: Often uses dependency trees where arrows point from the head to its dependent.

6. Functional Grammar (FG)

- Core Idea: A broad umbrella term for theories that prioritize the function of language in communication, rather than focusing solely on its formal structure. Language is seen as a tool for interaction and meaning-making.

- Key Features (often seen in theories like Systemic Functional Linguistics by M.A.K. Halliday):

- Meaning-Oriented: Emphasizes how grammatical choices contribute to meaning in context.

- Contextual: Analyzes language in relation to its social and cultural context.

- Metafunctions: Halliday proposes three primary metafunctions of language: Ideational (representing experience), Interpersonal (enacting social relations), and Textual (organizing discourse).

- Choices and Systems: Views grammar as a system of choices that speakers make to achieve communicative goals.

- Usage-Based: Often aligns with the idea that grammar emerges from language use.

- Distinction from Formal Grammars: Unlike TGG, which often treats meaning as secondary to syntax, FG integrates meaning and function into the very fabric of grammatical description.

7. Lexical Functional Grammar (LFG)

- Core Idea: Developed by Joan Bresnan and Ronald Kaplan as an alternative to TGG. It emphasizes the importance of lexical information and parallel levels of representation for syntactic structure and functional structure.

- Key Features:

- Parallel Structures: Maintains two primary levels of representation:

- C-structure (Constituent Structure): A phrase-structure tree representing the linear order and grouping of words.

- F-structure (Functional Structure): A set of attribute-value pairs representing grammatical functions (subject, object, oblique, etc.) and semantic roles.

- Lexicalism: Much of the grammatical information and constraints are stored in the lexicon (the mental dictionary), rather than being derived by complex transformational rules.

- Non-Derivational: Unlike TGG, LFG is not transformational; instead, it uses principles of unification to link c-structure and f-structure.

- Completeness and Coherence: Constraints ensure that f-structures are well-formed and contain all necessary information.

- Focus: Particularly strong in describing grammatical relations and their mapping to argument structure.

8. Construction Grammar (CG)

- Core Idea: A family of theories that argues that grammatical knowledge consists of a network of “constructions,” which are conventional pairings of form and meaning. These constructions range from individual words to complex sentence patterns.

- Key Features:

- Construction: The central unit of analysis. A construction is a form-meaning pairing, where the form can be anything from a morpheme to a complex syntactic pattern, and the meaning can be lexical, idiomatic, or abstract grammatical meaning.

- Usage-Based: Emphasizes that grammatical knowledge is acquired and organized through experience with language use.

- Continuum of Specificity: Constructions exist at various levels of abstraction, from concrete idioms (e.g., “kick the bucket”) to highly abstract grammatical patterns (e.g., the ditransitive construction “Subj V Obj1 Obj2”).

- Inheritance Hierarchies: Constructions are related to each other through inheritance, forming a complex network.

- No Firm Form-Meaning Divide: Blurs the traditional distinction between lexicon and grammar.

- Varieties: Different schools exist, including Berkeley Construction Grammar (Fillmore, Kay, Lakoff), Radial Construction Grammar (Goldberg), and Embodied Construction Grammar.

9. Head-Driven Phrase Structure Grammar (HPSG)

- Core Idea: Developed by Carl Pollard and Ivan Sag, HPSG is a constraint-based, lexicalist, and non-derivational theory that uses typed feature structures as its primary descriptive device.

- Key Features:

- Feature Structures: All linguistic information (syntactic, semantic, phonological) is represented in complex data structures called “feature structures” (or “AVMs” - attribute-value matrices).

- Unification: Grammatical rules are essentially constraints that combine and unify these feature structures.

- Lexicalism: Like LFG, it places a heavy emphasis on the lexicon, where much of the grammatical information is stored.

- Sign-Based: Views linguistic expressions as “signs” that simultaneously contain phonetic, semantic, and syntactic information.

- Non-Derivational: Does not involve transformations between different levels of representation.

- Constraint-Based: Grammar is a system of interacting constraints rather than a series of sequential rules.

- Mathematical Sophistication: Known for its formal rigor and use of logic.

The diagram you saw covers the “big nine,” but the field is a lot richer. Here are some of the notable theories missing from that picture, grouped a bit so it’s easier to digest:

- Government & Binding (GB) — 1980s generative framework with principles and parameters.

- Minimalist Program — 1990s–present; Chomsky’s drive for economy and simplicity in grammar.

- Tree-Adjoining Grammar (TAG) — formal system for syntax and semantics, strong in computational modeling.

- Generalized Phrase Structure Grammar (GPSG) — precursor to HPSG, constraint-based.

- Systemic Functional Grammar (SFG) — Halliday; grammar as a resource for meaning in context.

- Prague School Functionalism — early 20th c.; emphasis on topic–comment and information structure.

- Role and Reference Grammar (RRG) — links syntax, semantics, and discourse across languages.

- Cognitive Grammar — Langacker; grammar as symbolic and conceptual structure.

- Usage-based Models — Bybee, Tomasello; grammar shaped by frequency and analogy.

- Embodied / Fluid Construction Grammar — constructionist models tied to cognition or computation.

- Meaning–Text Theory (MTT) — Mel’čuk; semantically oriented, models paraphrase and surface variation.

- Word Grammar — Hudson; dependency-based, network model of syntax.

- Arc Pair Grammar — 1970s relational approach to grammatical functions.

- Relational Grammar — focuses on grammatical relations like subject and object.

- Combinatory Categorial Grammar (CCG) — highly compositional, strong in computational parsing.

- DisCoCat (Distributional Compositional Categorical model) — recent hybrid of categorial grammar and vector-space semantics.

If you found this useful, please cite this as:

Tripathi, Vivek (Jun 2025). an overview of the grammatical theories. https://iamalinguist.github.io.

or as a BibTeX entry:

@article{tripathi2025an-overview-of-the-grammatical-theories,

title = {an overview of the grammatical theories},

author = {Tripathi, Vivek},

year = {2025},

month = {Jun},

url = {https://iamalinguist.github.io/blog/2025/grammatical-theories/}

}